Retrieving, Processing, and Visualizing Enormous Email Data

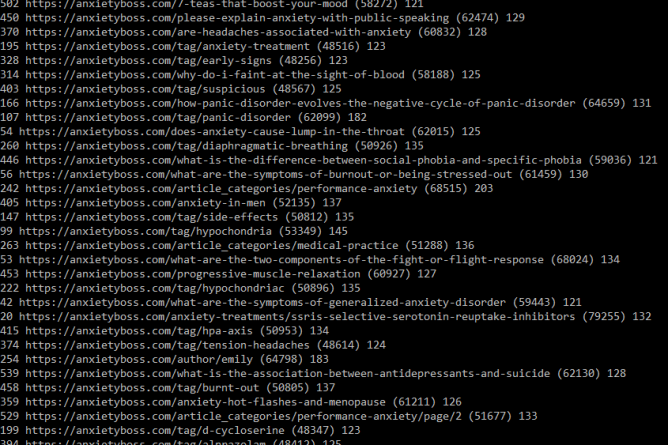

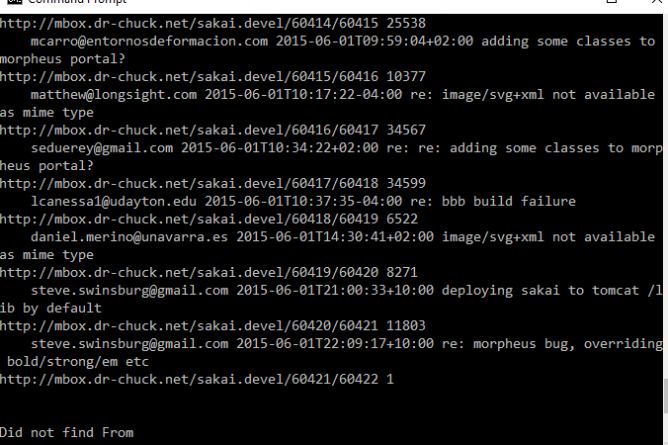

In this project, I will retrieve a large email data set from the web and load it into a database. I will then analyze and clean up the data so that it can be visualized. I…
